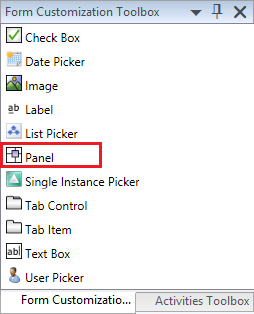

Anyone who’s done some form editing with the Authoring Tool knows that we only have a small number of controls to work with when creating our customizations. One control that I’ve been missing in particular is the Stack Panel. You know, the container you place other controls in, which then handles the layout of all its child controls for you?

As it turns out, you can “create” a Stack Panel without using Visual Studio, with just a small XML modification.

This is how you would do it:

- Open the Authoring Tool and open the form you would like to add the Stack Panel to

- Add the control named Panel where you would like your Stack Panel to be. Do not make any other modifications to this control yet!

- Save the Management Pack and open it in an XML editor (I use Notepad++)

- Locate the Panel control (which is actually a Grid) that we added. If you didn’t make any other form customizations after adding the control, it should be at the bottom of the `<Customization>` tag and look similar to this:

  ```xml
  <AddControl Parent="StackPanel205" Assembly="PresentationFramework, Version=3.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" Type="System.Windows.Controls.Grid" Left="0" Top="0" Right="0" Bottom="0" Row="0" Column="0" />
  ```

- To convert the Grid to a Stack Panel, simply change `Grid` in the `Type` attribute to `StackPanel`. In the example above, the code would look like this after the change:

  ```xml
  <AddControl Parent="StackPanel205" Assembly="PresentationFramework, Version=3.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" Type="System.Windows.Controls.StackPanel" Left="0" Top="0" Right="0" Bottom="0" Row="0" Column="0" />
  ```

- Save the file and reload (close and open) the MP in your Authoring Tool. The Panel should now be a Stack Panel and you can go ahead and do the rest of your customizations!
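If you do this often, the manual edit in the steps above can be scripted. The sketch below is a hypothetical helper, not part of the Authoring Tool. It assumes the MP is saved as unsealed XML, that the Authoring Tool writes the attribute exactly as `Type="System.Windows.Controls.Grid"` (double quotes, self-closing `<AddControl ... />`), and that the Panel you just added is the *last* Grid `AddControl` in the file, which holds if you made no other customizations afterwards. It does a plain text replacement so the rest of the file keeps its exact formatting. Keep a backup of the MP before running it.

```python
import re

# Matches one self-closing <AddControl ... /> element (attributes may span lines).
ADD_CONTROL = re.compile(r"<AddControl\b[^>]*/>", re.DOTALL)
GRID_TYPE = 'Type="System.Windows.Controls.Grid"'
STACK_TYPE = 'Type="System.Windows.Controls.StackPanel"'


def convert_last_grid_to_stackpanel(mp_xml: str) -> str:
    """Return mp_xml with the last Grid AddControl retyped as a StackPanel.

    Assumption: the Panel just added in the Authoring Tool is the last
    AddControl whose Type is System.Windows.Controls.Grid.
    """
    grids = [m for m in ADD_CONTROL.finditer(mp_xml) if GRID_TYPE in m.group(0)]
    if not grids:
        raise ValueError("no AddControl with Type System.Windows.Controls.Grid found")
    last = grids[-1]
    # Only the Type attribute changes; Parent, Assembly, margins etc. are kept.
    converted = last.group(0).replace(GRID_TYPE, STACK_TYPE, 1)
    return mp_xml[: last.start()] + converted + mp_xml[last.end():]


def convert_file(path: str) -> None:
    """Rewrite the MP file in place (hypothetical helper; back up first)."""
    # newline="" preserves the file's original line endings on read and write.
    with open(path, encoding="utf-8", newline="") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write(convert_last_grid_to_stackpanel(text))
```

For example, `convert_file("MyFormCustomizations.xml")` (a made-up file name) would apply the change, after which you reload the MP in the Authoring Tool as described above.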